Multi-class semantic segmentation of pediatric chest radiographs

Abstract

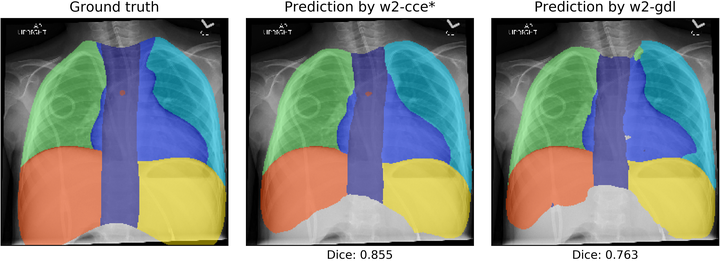

Chest radiographs are a common diagnostic tool in pediatric care, and several computer-augmented decision tasks for radiographs would benefit from knowledge of anatomic locations within the thorax. For example, a pre-segmented chest radiograph could provide context for algorithms that automatically grade the placement of catheters and tubes. This work develops a deep learning approach that automatically segments chest radiographs into multiple regions, providing anatomic context for future automatic methods. The task is challenging because it requires multi-class segmentation under extreme class imbalance between regions. In an IRB-approved study, pediatric chest radiographs were collected and annotated with custom software in which users drew boundaries around seven regions of the chest: left and right lung, left and right subdiaphragm, spine, mediastinum, and carina. We trained a U-Net-style architecture on 328 annotated radiographs and compared model performance across combinations of loss functions, weighting schemes, and data augmentation. On a test set of 70 radiographs, our best-performing model achieved 93.8% mean pixel accuracy and a mean Dice coefficient of 0.83. We find that (1) cross-entropy loss consistently outperforms generalized Dice loss, (2) light augmentation, including random rotations, improves overall performance, and (3) pre-computed pixel weights that account for class frequency yield small performance gains. Overall, our approach produces realistic eight-class chest segmentations that can provide anatomic context for line placement and potentially other medical applications.
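To make the reported quantities concrete, the following is a minimal NumPy sketch of pixel accuracy, per-class Dice coefficient, and a class-frequency pixel weight map computed on integer label maps. It is an illustration under stated assumptions, not the implementation used in this work: the function names are hypothetical, the eighth class is assumed to be background, the inverse-frequency weighting is one common choice rather than the scheme evaluated here, and the exact averaging behind the reported means may differ.

```python
import numpy as np

# Assumption: the seven annotated regions plus one background class.
NUM_CLASSES = 8


def pixel_accuracy(pred, target):
    """Fraction of pixels whose predicted label equals the annotated label."""
    return float(np.mean(pred == target))


def dice_per_class(pred, target, num_classes=NUM_CLASSES):
    """Dice coefficient 2|A & B| / (|A| + |B|) for each class.

    Classes absent from both maps are left as NaN so that they can be
    skipped when averaging (for example with np.nanmean).
    """
    scores = np.full(num_classes, np.nan)
    for c in range(num_classes):
        p = pred == c
        t = target == c
        denom = p.sum() + t.sum()
        if denom > 0:
            scores[c] = 2.0 * np.logical_and(p, t).sum() / denom
    return scores


def inverse_frequency_pixel_weights(target, num_classes=NUM_CLASSES):
    """Per-pixel weight map inversely proportional to class frequency.

    Hypothetical weighting scheme: weights of the classes present in
    `target` are rescaled so that they average to 1 across those classes.
    """
    counts = np.bincount(target.ravel(), minlength=num_classes).astype(float)
    freq = counts / counts.sum()
    class_w = np.zeros(num_classes)
    present = freq > 0
    class_w[present] = 1.0 / freq[present]
    class_w[present] /= class_w[present].mean()
    return class_w[target]


# Toy example: a 4x4 label map with a small class-1 region on background.
target = np.zeros((4, 4), dtype=np.int64)
target[:2, :2] = 1
pred = target.copy()
pred[0, 0] = 0  # one class-1 pixel mislabelled as background

acc = pixel_accuracy(pred, target)
dice = dice_per_class(pred, target)
weights = inverse_frequency_pixel_weights(target)
```

In the toy example a single wrong pixel lowers the Dice score of the small class far more than that of the large one, which is why the mean Dice coefficient is more sensitive than pixel accuracy to errors on small regions such as the carina. A weight map of this kind can be multiplied into a per-pixel cross-entropy loss so that rare classes contribute more to the gradient.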